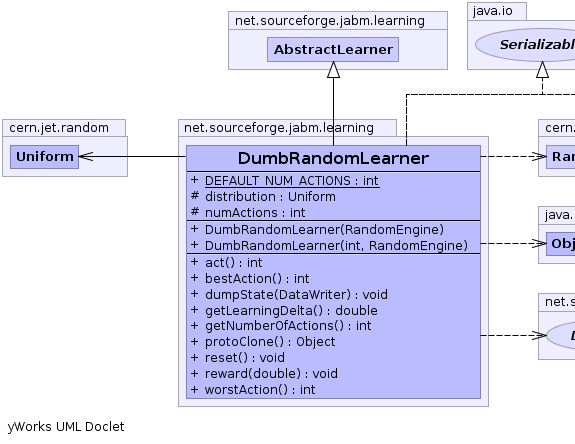

net.sourceforge.jabm.learning

Class DumbRandomLearner

java.lang.Object

net.sourceforge.jabm.learning.AbstractLearner

net.sourceforge.jabm.learning.DumbRandomLearner

net.sourceforge.jabm.learning.DumbRandomLearner

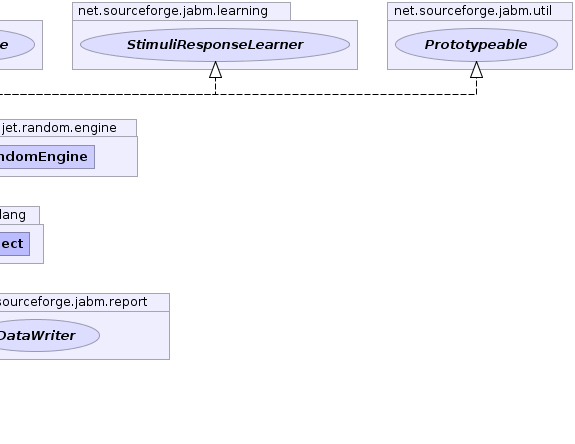

- All Implemented Interfaces:

- java.io.Serializable, java.lang.Cloneable, DiscreteLearner, Learner, StimuliResponseLearner, Prototypeable

public class DumbRandomLearner

- extends AbstractLearner

- implements StimuliResponseLearner, java.io.Serializable, Prototypeable

A learner that simply plays a random action on each iteration, without any learning. This is useful for control experiments.
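The behaviour described above can be sketched in a few lines. This is a minimal illustration, not the JABM source: the class name RandomLearnerSketch is hypothetical, java.util.Random stands in for the Colt RandomEngine the real class takes, and it assumes actions are the integers 0 to numActions - 1 chosen with equal probability (consistent with the cern.jet.random.Uniform field listed below, but not stated on this page). Returning 0.0 from getLearningDelta is likewise an assumption.

```java
// Hypothetical stand-in for DumbRandomLearner, written against java.util.Random
// instead of Colt's RandomEngine/Uniform. Not the JABM implementation.
class RandomLearnerSketch {
    private final int numActions;
    private final java.util.Random prng;

    RandomLearnerSketch(int numActions, java.util.Random prng) {
        if (numActions <= 0) {
            throw new IllegalArgumentException("numActions must be positive");
        }
        this.numActions = numActions;
        this.prng = prng;
    }

    /** Picks an action index in [0, numActions) with equal probability. */
    int act() {
        return prng.nextInt(numActions);
    }

    /** Ignores the reward: nothing is learned from it. */
    void reward(double reward) {
        // intentionally empty
    }

    /** Assumed: with no internal state updates, the learning delta is zero. */
    double getLearningDelta() {
        return 0.0;
    }

    int getNumberOfActions() {
        return numActions;
    }
}
```

Because reward() changes nothing, the sequence of actions depends only on the random number generator, which is what makes such a learner a useful baseline.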

- See Also:

- Serialized Form

Method Summary

| Type | Method | Description |
|---|---|---|
| int | act() | Request that the learner perform an action. |
| int | bestAction() | |
| void | dumpState(DataWriter out) | Write out our state data to the specified data writer. |
| double | getLearningDelta() | Return a value indicative of the amount of learning that occurred during the last iteration. |
| int | getNumberOfActions() | Get the number of different possible actions this learner can choose from when it performs an action. |
| java.lang.Object | protoClone() | |
| void | reset() | |
| void | reward(double reward) | Reward the learning algorithm according to the last action it chose. |
| int | worstAction() | |

Methods inherited from class java.lang.Object

clone, equals, finalize, getClass, hashCode, notify, notifyAll, toString, wait, wait, wait

Methods inherited from interface net.sourceforge.jabm.learning.Learner

monitor

Field Detail

numActions

protected int numActions

distribution

protected cern.jet.random.Uniform distribution

DEFAULT_NUM_ACTIONS

public static final int DEFAULT_NUM_ACTIONS

- See Also:

- Constant Field Values

Constructor Detail

DumbRandomLearner

public DumbRandomLearner(cern.jet.random.engine.RandomEngine prng)

DumbRandomLearner

public DumbRandomLearner(int numActions, cern.jet.random.engine.RandomEngine prng)
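The two constructors suggest that the single-argument form falls back on DEFAULT_NUM_ACTIONS. The sketch below shows that delegation pattern under that assumption. The class name ConstructorSketch, the use of java.util.Random in place of RandomEngine, and the placeholder value 10 for the default are all hypothetical; the real constant's value is listed under Constant Field Values.

```java
// Hypothetical sketch of constructor delegation; not the JABM source.
class ConstructorSketch {
    // Placeholder value: the real DEFAULT_NUM_ACTIONS may differ.
    static final int DEFAULT_NUM_ACTIONS = 10;

    protected final int numActions;
    protected final java.util.Random prng;

    // Mirrors DumbRandomLearner(RandomEngine prng): assumed to use the default count.
    ConstructorSketch(java.util.Random prng) {
        this(DEFAULT_NUM_ACTIONS, prng);
    }

    // Mirrors DumbRandomLearner(int numActions, RandomEngine prng).
    ConstructorSketch(int numActions, java.util.Random prng) {
        this.numActions = numActions;
        this.prng = prng;
    }
}
```

With the real class, the caller supplies a Colt engine instead, for example new DumbRandomLearner(5, new cern.jet.random.engine.MersenneTwister(42)); seeding the engine makes a control run reproducible.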

Method Detail

protoClone

public java.lang.Object protoClone()

- Specified by:

protoClone in interface Prototypeable

reset

public void reset()

act

public int act()

- Description copied from interface:

DiscreteLearner

- Request that the learner perform an action. Users of the learning algorithm should invoke this method on the learner when they wish to find out which action the learner is currently recommending.

- Specified by:

act in interface DiscreteLearner

- Returns:

- An integer representing the action to be taken.
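In a control experiment, act() is typically called once per iteration. The helper below is a hypothetical sketch that tallies how often each action is returned; act is passed as a java.util.function.IntSupplier so that any source of action indices can be plugged in. For a learner that chooses uniformly, each count should come out close to iterations / numActions.

```java
// Hypothetical helper for a control-experiment loop: act is any function that
// returns an action index in [0, numActions), e.g. a learner's act method.
class ActionTally {
    static int[] tally(java.util.function.IntSupplier act, int numActions, int iterations) {
        int[] counts = new int[numActions];
        for (int i = 0; i < iterations; i++) {
            int action = act.getAsInt();
            if (action < 0 || action >= numActions) {
                throw new IllegalStateException("action out of range: " + action);
            }
            counts[action]++; // one more occurrence of this action
        }
        return counts;
    }
}
```

With the real class, a call would presumably look like ActionTally.tally(learner::act, learner.getNumberOfActions(), 10000), since act() takes no arguments and returns an int.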

getLearningDelta

public double getLearningDelta()

- Description copied from interface:

Learner

- Return a value indicative of the amount of learning that occurred during the last iteration. Values close to 0.0 indicate that the learner has converged to an equilibrium state.

- Specified by:

getLearningDelta in interface Learner

- Specified by:

getLearningDelta in class AbstractLearner

- Returns:

- A double representing the amount of learning that occurred.
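This page does not say how the learning delta is computed. As an illustration only, suppose it were measured as the total change in the action-selection probabilities between iterations, where $n$ is the number of actions and $p_i^{(t)}$ is the probability of choosing action $i$ at iteration $t$ (both the measure and the symbols are assumptions, not JABM's definition). A learner that always chooses uniformly would then report exactly zero:

$$
\Delta_t = \sum_{i=1}^{n} \left| p_i^{(t)} - p_i^{(t-1)} \right|,
\qquad p_i^{(t)} = p_i^{(t-1)} = \frac{1}{n}
\;\Longrightarrow\; \Delta_t = 0 .
$$

Under such a measure, a value of 0.0 would reflect the absence of any updates rather than genuine convergence.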

dumpState

public void dumpState(DataWriter out)

- Description copied from interface:

Learner

- Write out our state data to the specified data writer.

- Specified by:

dumpState in interface Learner

- Specified by:

dumpState in class AbstractLearner

getNumberOfActions

public int getNumberOfActions()

- Description copied from interface:

DiscreteLearner

- Get the number of different possible actions this learner can choose from when it performs an action.

- Specified by:

getNumberOfActions in interface DiscreteLearner

- Returns:

- An integer value representing the number of actions available.

reward

public void reward(double reward)

- Description copied from interface:

StimuliResponseLearner

- Reward the learning algorithm according to the last action it chose.

- Specified by:

reward in interface StimuliResponseLearner
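Because no learning takes place, a reward should presumably leave later choices unchanged; this page does not document reward() beyond the interface description, so treat that as an assumption. One way to check it for a control baseline is to run two identically seeded learners, reward only one, and compare their action sequences. The harness below is a hypothetical sketch; act and reward are passed as java.util.function interfaces, so with the real class a caller could presumably pass learner::act and learner::reward.

```java
// Hypothetical harness: act and reward are passed as functions so that any
// learner exposing act() and reward(double) can be plugged in.
class RewardInvarianceCheck {
    /** Records n actions, calling reward after each one if giveRewards is true. */
    static int[] run(java.util.function.IntSupplier act,
                     java.util.function.DoubleConsumer reward,
                     int n, boolean giveRewards) {
        int[] actions = new int[n];
        for (int i = 0; i < n; i++) {
            actions[i] = act.getAsInt();
            if (giveRewards) {
                // Arbitrary payoff favouring action 0; a non-learning agent ignores it.
                reward.accept(actions[i] == 0 ? 1.0 : -1.0);
            }
        }
        return actions;
    }
}
```

If the rewarded and unrewarded sequences differ, the baseline is reacting to payoffs and is no longer a pure control.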

bestAction

public int bestAction()

- Specified by:

bestAction in interface StimuliResponseLearner

worstAction

public int worstAction()

- Specified by:

worstAction in interface StimuliResponseLearner
